Simulating and compensating changes in appearance between day and night vision

ACM SIGGRAPH 2014 / ACM Transactions on Graphics

Robert Wanat and Rafał K. Mantiuk

Motivation

Modern displays, such as the ones used in phones or tablets, are designed to work in both bright and dark environments. In bright conditions, these devices increase screen brightness. In dim conditions, such as indoors when the lights are dimmed, it is beneficial to reduce the screen brightness instead. Doing so reduces the strain on the eyes and lowers the power consumption. However, below a certain brightness level (luminance) the image quality suffers, as the human visual system can no longer retain normal colour and detail perception. Small details become invisible and colours appear washed out. The method we propose compensates for these appearance changes, making it possible to reduce screen brightness, and thus lower both eye strain and power consumption, while still preserving high image quality.

Applications

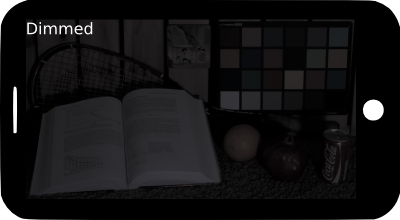

Illustration 2: Simulation of image appearance on a dark display, before and after processing.

The method enables a range of applications, including:

Dark display

The method enables dimming of a display, such as in mobile devices but also in TV sets, to a very low peak luminance level; for example, from 400 cd/m2 to 4 cd/m2. This results in 100-times lower power consumption of the display unit, which is responsible for over 50% of all power consumption in most mobile devices. Unlike existing display dimming techniques, which offer only moderate brightness reduction, our method enables dimming to very low brightness levels while actively compensating for changes in colour and detail appearance. The method is computationally efficient and can be easily implemented as real-time post-processing performed on a mobile GPU, as demonstrated in our proof-of-concept Android application. Illustration 1 shows the images produced when simulating (left) or compensating (right) a dark display. Illustration 2 simulates the appearance of a dimmed image before and after compensation (note that more details will be visible on a dim display viewed in darkness).

Age-adaptive compensation

Illustration 3: Results of compensation based on viewer's age.

Because our method relies on a model of contrast sensitivity, it can be easily extended to account for the differences in acuity and sensitivity between young and elderly observers. In Illustration 3 we show image compensation for a dimmed display with 10 cd/m2 peak luminance, tailored for 20-year-old and 80-year-old observers. Typically little compensation is needed for the 20-year-old, but details and brightness must be boosted for the older observer.

Illustration 4: Comparison of the original image (left) and the image rendered as an accurate representation of a night scene (right). Image courtesy of Kirk and O'Brien.

Illustration 5: Original image (left) and creative rendering as a night scene (right).

Reproduction of night scenes

Our method can also render images of night scenes to reproduce their appearance on much brighter displays. Illustration 4 shows an example of a night-time photograph and its rendering produced by our method. Note the loss of acuity and the changes in brightness and colour appearance.

Creative rendering of night scenes

The actual appearance change due to low luminance is often subtle. To achieve a more dramatic effect in entertainment applications, where perceptual accuracy is not crucial, it is often desirable to alter the appearance beyond the level predicted by the visual model. This is shown in the right image of Illustration 5, where we adjusted the parameters to produce an exaggerated change in image appearance.

The method

We propose an automatic method of preserving contrast and colour appearance across a wide range of luminance levels by matching image appearance between day and night vision.

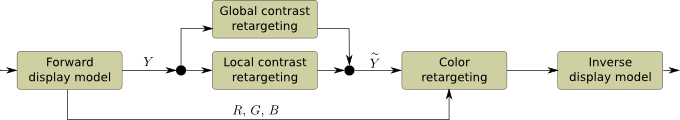

As shown in Illustration 6, the method consists of three main processing blocks: retargeting of global contrast to preserve overall brightness, retargeting of local contrast to preserve detail visibility, and retargeting of colours to preserve overall colourfulness and account for the Purkinje shift (a shift of hues towards blue at low luminance).

Each step is formulated as an optimization of the appearance match between two viewing conditions, usually one dark and the other bright. The appearance match in contrast is based on relatively little-known vision studies, which proposed a simple contrast appearance matching model based on a contrast sensitivity function (CSF). The appearance match in colour is based on models of rod and cone contribution to the colour (LMS) visual pathways and on our own new measurements and models.

In more detail

The input of the algorithm can be a linear RGB image, in which case it has to be passed through the forward display model to calculate the physical properties of the image. Alternatively, the input image can be an HDR image, in which case it is assumed to be the input of the source display. Next, the luminance image is decomposed into a Laplacian pyramid. The base band of the pyramid is passed through the global contrast retargeting step, which aims to reproduce the relative brightness of large image areas. All pyramid layers apart from the base band are passed through the local contrast retargeting step to accurately reproduce the visibility of small details. The results of both contrast retargeting steps are then recomposed into a single luminance image, which is used in the colour retargeting step. In this step, the rod input at low luminance is compensated for by altering the RGB values relative to luminance. A power function is then applied to each colour channel to model the saturation changes caused by the reduced sensitivity of cones at low luminance. Finally, the RGB and luminance values are merged and the resulting image is passed through the inverse display model, producing the final result of our algorithm.
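
The luminance path described above can be sketched in a few lines of Python. This is only an illustrative skeleton under stated assumptions, not the implementation from the paper: the display model is assumed to be a simple gain-gamma-offset curve with hypothetical parameters (`peak`, `black`, `gamma`), a 2x2 box filter with pixel replication stands in for the Gaussian kernel normally used in a Laplacian pyramid, the image sides are assumed to be divisible by 2 at every level, and the retargeting steps themselves are left as a placeholder comment. All function names are made up for this example.

```python
def forward_display(v, peak=400.0, black=0.4, gamma=2.2):
    """Pixel value in [0, 1] -> luminance in cd/m^2 (assumed gain-gamma-offset model)."""
    return (peak - black) * v ** gamma + black

def inverse_display(lum, peak=400.0, black=0.4, gamma=2.2):
    """Luminance in cd/m^2 -> pixel value, clamped to the display's range."""
    t = (lum - black) / (peak - black)
    return min(max(t, 0.0), 1.0) ** (1.0 / gamma)

def downsample(img):
    """Halve the resolution by averaging 2x2 blocks (box filter, not a Gaussian)."""
    h, w = len(img), len(img[0])
    return [[(img[y][x] + img[y][x + 1] + img[y + 1][x] + img[y + 1][x + 1]) / 4.0
             for x in range(0, w, 2)] for y in range(0, h, 2)]

def upsample(img):
    """Double the resolution by pixel replication."""
    out = []
    for row in img:
        wide = [p for p in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out

def decompose(img, levels):
    """Return [detail_0, ..., detail_{levels-1}, base_band]."""
    pyramid, current = [], img
    for _ in range(levels):
        smaller = downsample(current)
        coarse = upsample(smaller)
        # Each detail layer holds what the coarser level cannot represent.
        pyramid.append([[c - b for c, b in zip(cr, br)]
                        for cr, br in zip(current, coarse)])
        current = smaller
    pyramid.append(current)
    return pyramid

def recompose(pyramid):
    """Sum the layers back into one image, from the base band upwards."""
    current = pyramid[-1]
    for detail in reversed(pyramid[:-1]):
        up = upsample(current)
        current = [[u + d for u, d in zip(ur, dr)] for ur, dr in zip(up, detail)]
    return current

# Usage: a 4x4 test image, two detail layers plus a 1x1 base band.
pixels = [[((x + y) % 8) / 7.0 for x in range(4)] for y in range(4)]
lum = [[forward_display(v) for v in row] for row in pixels]
pyr = decompose(lum, levels=2)
# Global contrast retargeting would modify pyr[-1] here,
# and local contrast retargeting would modify pyr[:-1].
restored = recompose(pyr)
out_pixels = [[inverse_display(l) for l in row] for row in restored]
```

With no retargeting applied, recomposing the pyramid returns the input luminance (up to floating-point rounding), which makes the skeleton a convenient starting point for experimenting with per-layer contrast changes.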

Paper and additional materials

Simulating and compensating changes in appearance between day and night vision

Robert Wanat and Rafał K. Mantiuk

To appear in: Proc. of ACM SIGGRAPH 2014 / ACM Transactions on Graphics (TOG), Volume 33, Issue 4

Contact

Rafał Mantiuk

mantiuk@bangor.ac.uk